Evidence-Centered Design and Learning Performances

To support formative assessment aligned with the NGSS, we use evidence-centered design (ECD) (Mislevy & Haertel, 2006) to systematically unpack NGSS Performance Expectations into multiple components that we call learning performances, which can guide formative assessment opportunities. Learning performances are knowledge-in-use statements that incorporate aspects of disciplinary core ideas, science and engineering practices, and crosscutting concepts that students need to integrate as they progress toward the larger end-of-grade-band performance expectations. Our design process, described below, enables us to derive a set of learning performances from a performance expectation in a principled way.

Design Process Overview

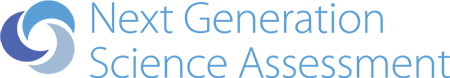

Our design process involves (1) identifying target performance expectations, (2) unpacking the three NGSS performance dimensions in those performance expectations, (3) developing integrated concept maps, (4) articulating learning performances, (5) specifying design patterns for the assessment tasks associated with the learning performances, (6) developing tasks, and (7) developing scoring rubrics. See Recommended Reads to learn more.

1. Identify Target Performance Expectations

We first identify a performance expectation or a clustered set of performance expectations.

2. Unpacking of Performance Dimensions

Our unpacking process entails gathering substantive information about how knowledge and skills are acquired and used in the domains for purposes of designing assessments:

- We unpack the disciplinary core ideas by elaborating and documenting the meaning of key terms, defining expectations for understanding at the targeted grade band, determining assessment boundaries for content knowledge, and identifying the background knowledge students are expected to have in order to develop a grade-level-appropriate understanding of a disciplinary core idea.

- We unpack the science and engineering practices by defining the core aspects of the practices, identifying intersections with other practices, and articulating the evidence required to demonstrate the knowledge, skills, and abilities associated with the practices.

- We unpack the crosscutting concepts by defining their core aspects, identifying intersections with practices, and articulating the evidence required to demonstrate the knowledge, skills, and abilities associated with the crosscutting concept.

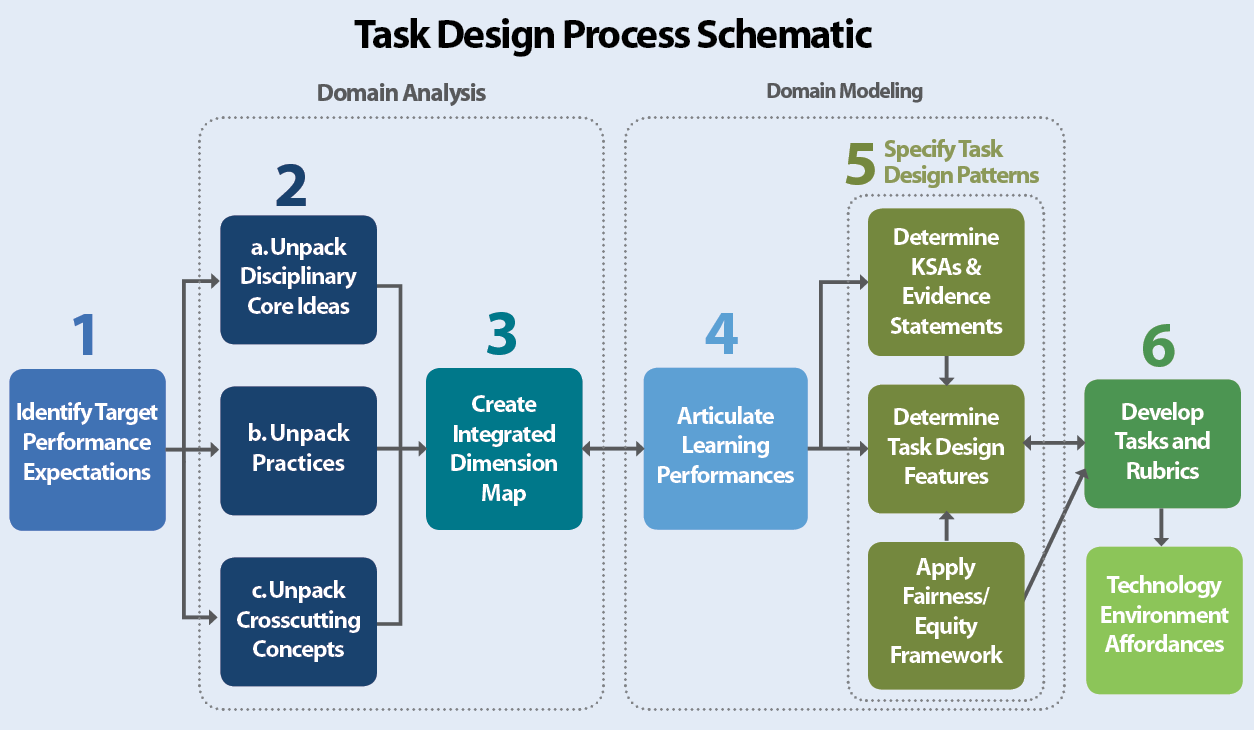

3. Develop Integrated Dimension Map

We use the elaborations in the unpacking process to develop integrated concept maps that lay out the conceptual terrain for fully achieving each performance expectation. These integrated concept maps describe the essential disciplinary relationships and link them to aspects of the targeted crosscutting concepts and science and engineering practices. These maps are essential to the principled articulation of learning performances that integrate the performance dimensions and coherently represent the target performance expectations.

4. Articulating Learning Performances

Building on the relationships identified in the integrated concept maps, we articulate sets of learning performances corresponding to each performance expectation. Multiple learning performances work together to build toward a performance expectation in a way that can inform a teacher about a student’s progress over time. Each learning performance integrates aspects of a disciplinary core idea, a science practice, and a crosscutting concept in a way that both supports instruction around the performance expectation and provides evidence of a student’s proficiency with the performance expectation.

5. Specifying Design Patterns

We next specify a design pattern for each learning performance. Assessment designers can use design patterns to develop assessment tasks that are aligned with the target proficiencies. The design patterns describe task features that are necessary to elicit evidence of student proficiency and include the following components:

- Articulating knowledge, skills, and abilities (KSAs). For each learning performance, we articulate the focal KSAs representing the specific performance constructs that are to be assessed. We also identify additional KSAs that are needed to respond to the task but are not assessed by the task.

- Articulating assessment task design features. Design patterns include two types of task design features: (1) characteristic features of tasks, which must be present to provide the desired evidence of proficiency, and (2) variable features of tasks, which may be varied in order to shift difficulty, focus or context, or to address the needs of students with specific instructional requirements or abilities.

- Applying an equity and fairness framework. Our design patterns include task features derived from an equity and fairness framework that we developed to help ensure that tasks fairly assess students across social and cultural groups.
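The components above can be pictured as a simple record. The following is a minimal sketch, not the project's actual tooling: the class name `DesignPattern`, its field names, the `missing_features` helper, and all example values are hypothetical and chosen only to mirror the components listed above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DesignPattern:
    """Hypothetical container for the design-pattern components listed above."""

    learning_performance: str
    focal_ksas: list[str]               # constructs the task is meant to assess
    additional_ksas: list[str]          # needed to respond, but not assessed
    characteristic_features: list[str]  # must be present in every task
    variable_features: dict[str, list[str]] = field(default_factory=dict)  # may vary

    def missing_features(self, task_features: set[str]) -> list[str]:
        """Return the characteristic features a draft task does not yet include."""
        return [f for f in self.characteristic_features if f not in task_features]


# Placeholder content only; not drawn from an actual design pattern.
pattern = DesignPattern(
    learning_performance="(placeholder learning performance statement)",
    focal_ksas=["focal KSA 1", "focal KSA 2"],
    additional_ksas=["reading grade-level text"],
    characteristic_features=["presents a phenomenon", "requires use of evidence"],
    variable_features={"context": ["familiar", "unfamiliar"]},
)

draft_task = {"presents a phenomenon"}
print(pattern.missing_features(draft_task))
```

Keeping characteristic and variable features in separate fields reflects the distinction drawn above: the first group is checked for presence in every task, while the second is a menu of options a designer may choose from.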

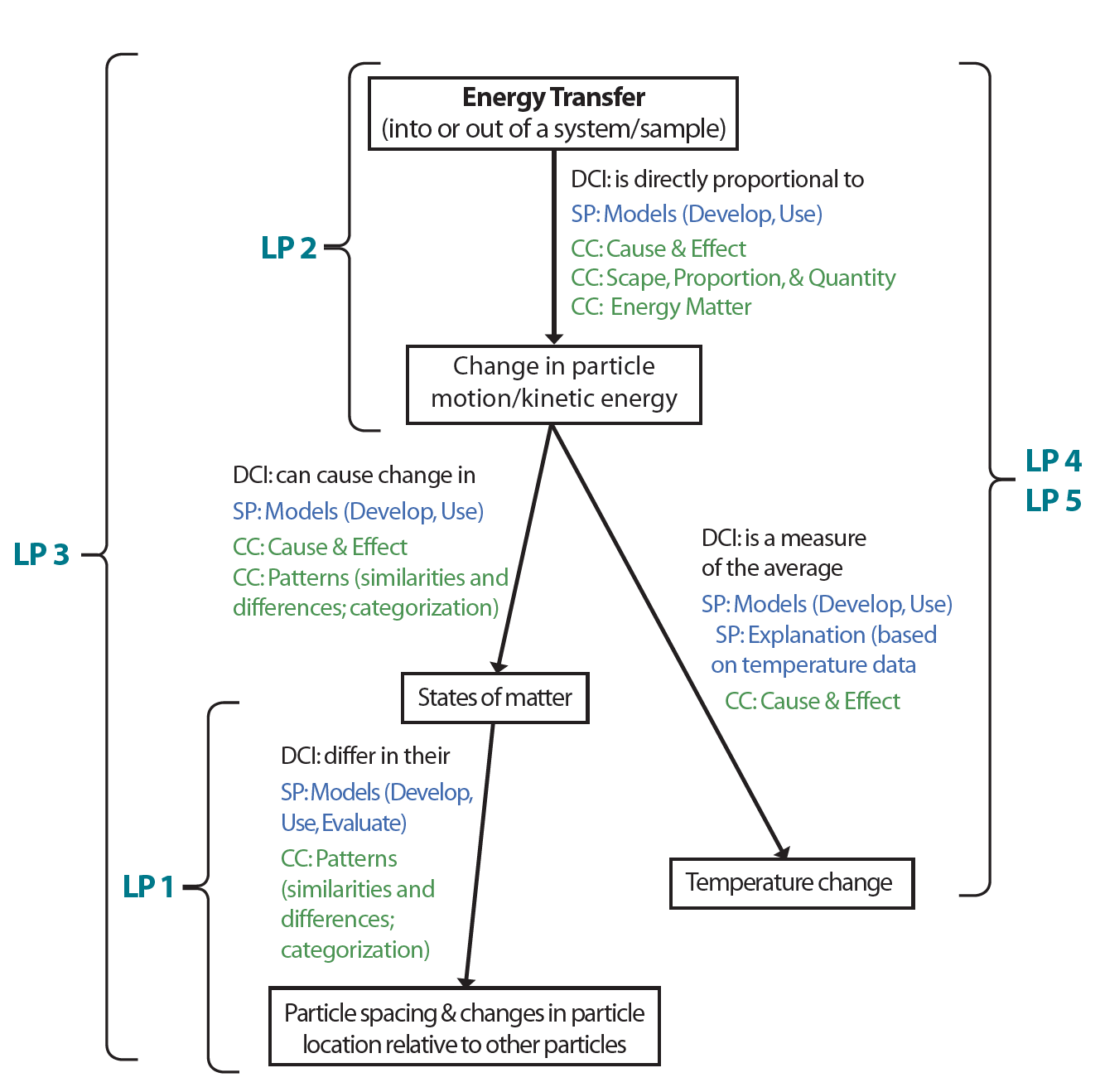

6. Example Task

The final phase of the design process uses the design patterns to construct assessment tasks aligned with each learning performance. The task designs vary the variable task features, allowing for the development of multiple tasks within a ‘family’ that differ in difficulty level while maintaining alignment with the learning performance.
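One way to picture a task family is as the set of combinations of variable-feature settings, with the characteristic features held fixed. The sketch below is illustrative only: the feature names and values are hypothetical, and enumerating every combination is an assumption made for the example, since a designer would normally select only the combinations that suit the students and context.

```python
from itertools import product

# Hypothetical variable features; names and values are placeholders.
variable_features = {
    "context": ["familiar", "unfamiliar"],
    "scaffolding": ["high", "low"],
}


def task_family(features):
    """Enumerate every combination of variable-feature settings."""
    names = list(features)
    return [dict(zip(names, combo)) for combo in product(*features.values())]


family = task_family(variable_features)
print(len(family))  # 4
print(family[0])    # {'context': 'familiar', 'scaffolding': 'high'}
```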

7. Rubric Development

The rubric development approach centers on multiple rubric components, each of which corresponds to a distinct aspect of proficiency of interest to teachers for classroom assessment. The rubrics are intended for use by researchers and aim to promote high scoring reliability. We use the focal KSAs and evidence statements from the design patterns to develop a scoring rubric for each assessment task. To keep a student’s proficiency with each focal KSA distinct from the others, each rubric component measures proficiency with one specific focal KSA and builds on the corresponding evidence statement. When scoring a student’s response to a task, scorers apply each rubric component to the response, obtaining a set of scores that collectively describe the student’s proficiency with the learning performance. In addition to separating the multiple aspects of proficiency needed to respond correctly to a task, the individual rubric components focus scorers’ attention on specific features of a student’s response, promoting reliability in scoring.
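The scoring approach described above, one rubric component per focal KSA yielding a set of scores rather than a single total, can be sketched as follows. This is a minimal illustration under stated assumptions: `RubricComponent`, `score_response`, the score ranges, and the example KSAs are all hypothetical, and the judgments themselves are assumed to come from a human scorer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RubricComponent:
    focal_ksa: str           # the single focal KSA this component measures
    evidence_statement: str  # what a response must show for this KSA
    max_score: int           # assumed scale: integers from 0 to max_score


def score_response(components, judgments):
    """Combine a scorer's per-component judgments into a score profile.

    `judgments` maps each focal KSA to the score a human scorer assigned.
    Every component must be scored, and each score must be within range.
    """
    profile = {}
    for component in components:
        if component.focal_ksa not in judgments:
            raise ValueError(f"no score recorded for {component.focal_ksa!r}")
        score = judgments[component.focal_ksa]
        if not 0 <= score <= component.max_score:
            raise ValueError(f"score {score} out of range for {component.focal_ksa!r}")
        profile[component.focal_ksa] = score
    return profile


# Placeholder rubric; not taken from an actual task.
rubric = [
    RubricComponent("focal KSA 1", "(placeholder evidence statement)", max_score=2),
    RubricComponent("focal KSA 2", "(placeholder evidence statement)", max_score=1),
]

profile = score_response(rubric, {"focal KSA 1": 2, "focal KSA 2": 0})
print(profile)  # {'focal KSA 1': 2, 'focal KSA 2': 0}
```

Returning a per-KSA profile instead of a summed total keeps the separate aspects of proficiency visible, which is the point of using distinct rubric components.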
